AI-Powered Ops: How I Tamed Our Detection Pipelines

The Before Times: A Tale of Too Many Tabs

Picture this: It's 3 AM, and PagerDuty's dulcet tones have just yanked you from a dream about refactoring that legacy Python codebase. The alert reads like a cryptic prophecy: "DetectionPipeline_AWS_SecurityEvents_v2 has failed with error: Task failed successfully." 🤔

Ah yes, the classic cryptic error. And while there's a potential future post on making these errors suck less, let's assume we can't control that and we are stuck with the status-quo when it comes to escalations.

In the pre-MCP era, my troubleshooting dance went something like this:

- Squint at PagerDuty - Try to decipher which of our 55+ multi-cloud Databricks workspaces this job lives in while my brain boots up to consciousness.

- The Great Tab Hunt - Open seventeen browser tabs trying to find the right Databricks environment (Was it prod-us-east? prod-us-west? qatarcentral? That one workspace I accidentally created that we're definitely going to migrate away from next quarter?)

- Navigation Nightmare - Click through Databricks' UI maze: Workflows → Jobs → Search → Finally find the job → Runs → Failed run → Error logs

- Context Loading - Stare at the error, realize I need to check the previous run (or the run before that), lose my place, start over.

- The Investigation - Check logs, realize I need to look at the cluster events, open another tab, check Slack for any related discussions, open another tab, check our runbook documentation, open another tab...

By the time I'd assembled all the context needed to actually debug the issue, I'd lost 10-15 minutes to pure navigation and context-switching overhead. Multiply this by 5-10 incidents per on-call shift, and you're looking at hours of toil that could've been spent on literally anything else.

The real killer wasn't the debugging itself—it was the cognitive load of maintaining mental state across a dozen different tools and interfaces. Each context switch meant rebuilding my understanding of where I was in the investigation. It was like trying to solve a jigsaw puzzle where someone kept moving the pieces every time you looked away.

Shifting attention between tasks comes at a steep cognitive price. Research shows that each switch not only slows us down but also drains mental resources, resulting in increased fatigue, reduced focus, and poorer decision-making. Chronic multitasking can eat up to 40% of productive time and leaves people more mentally exhausted and less effective at their work. Over time, the relentless cognitive load from frequent task switching can impair memory, lower overall performance, and contribute to long-term stress and even burnout.

So how do we make life better for the "runner"?

Enter MCP: The Hero We Needed

Model Context Protocol (MCP) servers are like having a really smart assistant who already knows where everything is in your infrastructure. Instead of teaching an AI how to navigate your specific setup every time, MCP servers provide a standardized way for AI assistants to interact with your tools programmatically.

During my last on-call rotation I sought to use an LLM agent enabled with MCP as a "tier 0" triager for all incoming escalations.

Setting up MCP for our infrastructure was surprisingly straightforward. Here's the gist:

PagerDuty MCP Server

{

"mcpServers": {

"pagerduty": {

"command": "uvx",

"args": ["mcp-server-pagerduty"],

"env": {

"PAGERDUTY_API_KEY": "your-api-key"

}

}

}

}

This little config gave Claude the ability to query incidents, check on-call schedules, and pull incident details directly. No more tab hunting for incident context.

Databricks MCP Server

The Databricks setup was where things got interesting. We have multiple workspaces across different regions, and keeping track of which detection job runs where was its own special form of suffering. The MCP server configuration looked like:

{

"databricks": {

"command": "python",

"args": ["-m", "dnr_mcp_server"],

"env": {

"DATABRICKS_CONFIG_FILE": "~/.databrickscfg"

}

}

}

But the real magic was in setting up workspace aliases in the Databricks config:

[azure-northeurope]

host = https://adb-12345.azuredatabricks.net

token = dapi-your-token-here

[aws-prod-east]

host = https://your-workspace.cloud.databricks.com

token = dapi-another-token

Now instead of remembering URLs that look like they were generated by a random number generator having a bad day, I could reference workspaces by meaningful names, eg "us-west-2".

The New Reality: Ops Rotation Nirvana

Here's how the same 3 AM page plays out now:

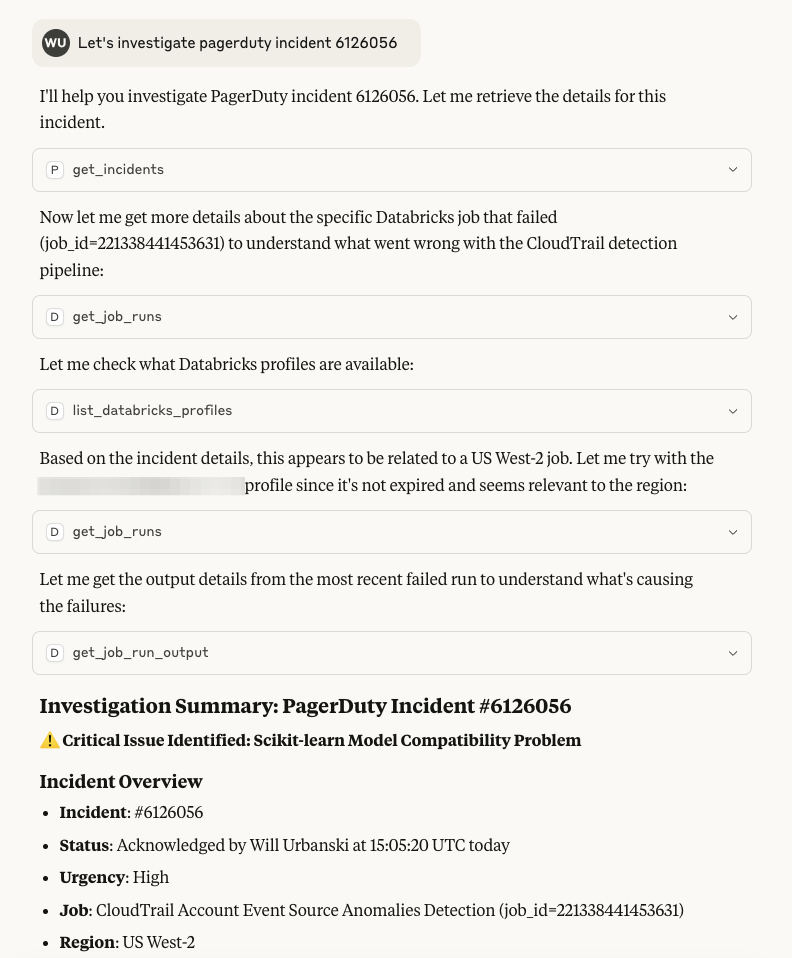

Me: "Hey Claude, what's going on with our PagerDuty incidents?"

Claude: checks PagerDuty "You have 2 triggered incidents. The most recent is 'DetectionPipeline_AWS_SecurityEvents_v2 failed' from 5 minutes ago."

Me: "Can you check what's happening with that Databricks job?"

Claude: seamlessly pivots to the correct workspace, finds the job, checks recent runs "The job has failed 3 times in the last hour. The most recent failure shows an OutOfMemoryError on the driver node. Looking at the cluster events, there was an autoscaling attempt that failed due to instance capacity issues in us-east-1."

Me: "What about previous successful runs? Any pattern?"

Claude: analyzes historical data "The last 5 successful runs processed between 50-60GB of data. Today's failed runs are trying to process 340GB. There's likely an upstream data pipeline issue causing this massive increase."

Total time: 2 minutes. Total tabs opened: 1 (this chat).

Real-World Win: I reduced Ops toil

Last week provided the perfect test case. When detection pipelines failed, or I received a page, I would simply ask Claude about them:

Pre-MCP me would have spent an hour assembling data from various sources. Instead, my workflow now looks like this:

Claude immediately pulled the job history, analyzed the failure patterns, checked the cluster events during those times, and even correlated it with our on-call schedule. The revelation? The failures coincided with an upgrade of scikit-learn in a model training pipeline, resulting in an incompatibility between the training and detection workflows.

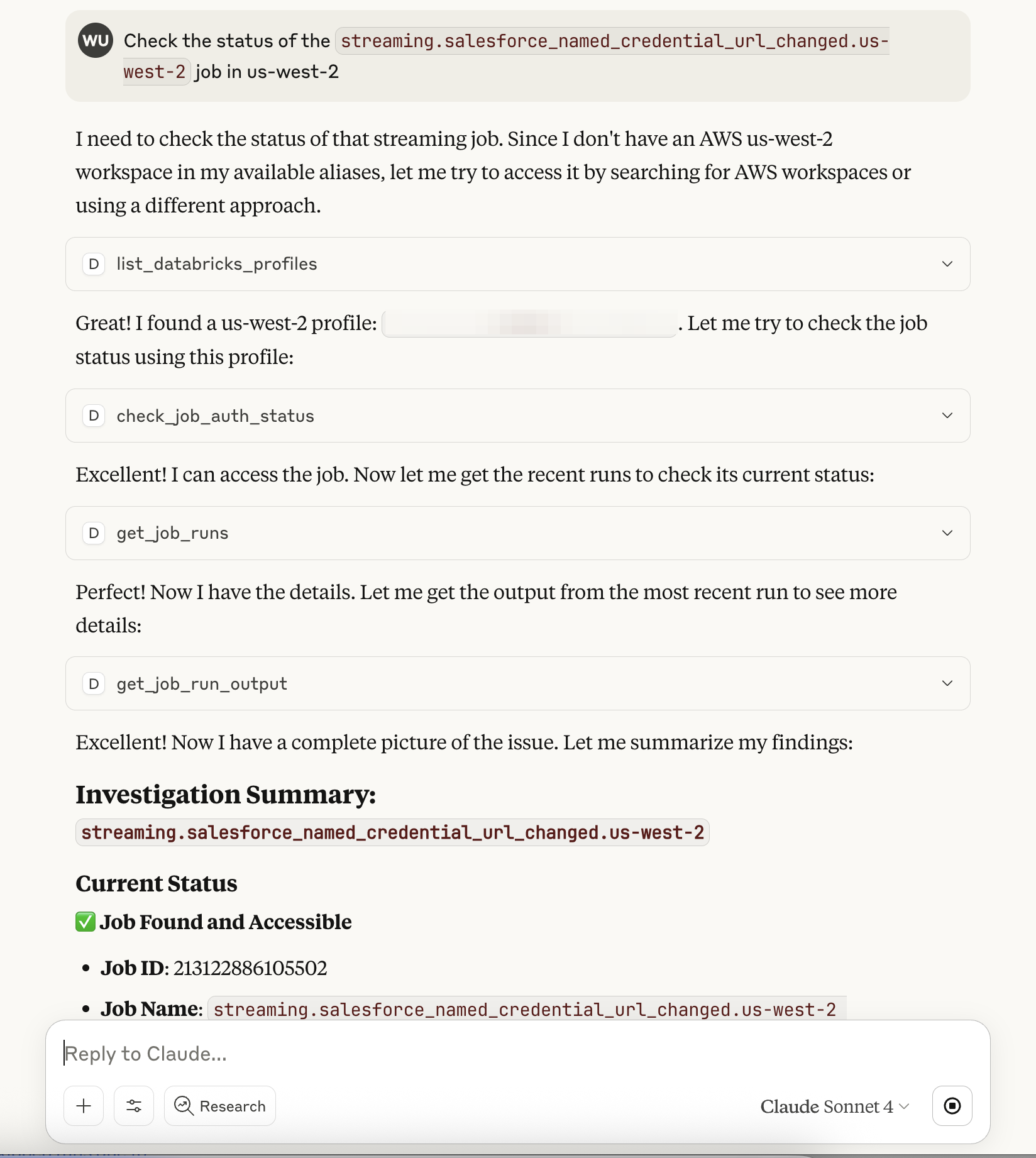

These improvements also enable natural language investigation of workflows and regions:

With filesystem and source code repository integrations I can now triage, respond to, and resolve incidents without leaving Claude.

The Technical Magic Behind the Scenes

What makes this work so well is that MCP servers expose our infrastructure capabilities as tools that Claude can use naturally. When I ask about a Databricks job, Claude doesn't need me to specify which workspace—it can check the available workspaces and search across them. When investigating failures, it can pull logs, check cluster events, and analyze patterns without me having to navigate between different UIs.

The conversation flow feels natural because Claude maintains context throughout. It remembers which job we're discussing, what patterns we've already identified, and can build on previous findings without me having to re-explain everything.

Lessons Learned and Next Steps

I didn't need another on-call rotation to understand that context switching is the silent killer of efficiency. Most would agree that eliminating it doesn't just save time—it saves mental energy for actual problem-solving.

But the switch to MCP-powered operations has taught me a few things:

- Natural language interfaces shine for investigative work. When you're trying to understand a problem, being able to ask follow-up questions naturally is infinitely better than clicking through predetermined UI paths.

- The setup investment pays off immediately. It took maybe an hour to set up both MCP servers. I recouped that time in the first day of my on-call shift.

Next, I'm planning to add MCP servers for our non-Databricks infrastructure. The dream is to have Claude be able to correlate issues across our entire stack—imagine asking "Are there any AWS service disruptions that might be affecting our Databricks jobs?" and getting an immediate, comprehensive answer.

The Bottom Line

If you're doing ops rotations and find yourself drowning in tabs and context switches, give MCP + Claude a shot. Your 3 AM self will thank you, and you might even get back to that refactoring dream a little sooner.